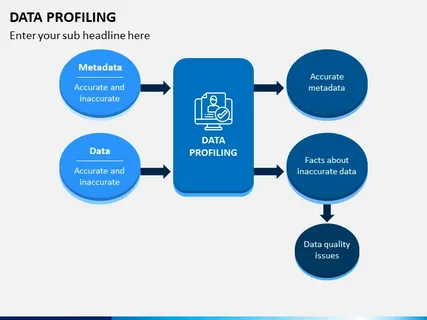

Data is the lifeblood of modern enterprise, yet it often arrives in messy, untamed, and opaque forms. Before we can leverage its power, we must first understand its nature, its structure, and its hidden imperfections. This is the realm of Automated Data Profiling (ADP)—the essential, often-overlooked first step in the journey from raw data to actionable insight.

Think of data science not as a technical discipline, but as archaeology. The data scientist is an archaeologist, sifting through layers of digital sediment—tables, columns, and records—to reconstruct a coherent picture of the past or present reality. ADP is the high-tech ground-penetrating radar this archaeologist uses: a crucial tool that surveys the site before the digging even begins, pinpointing potential treasures, structural faults, and zones of contamination hidden beneath the surface. It uses software to rapidly discover metadata and quality issues in datasets, transforming the daunting task of manual exploration into an efficient, repeatable process.

1. Mapping the Data Landscape: Statistical & Structural Discovery

The initial phase of ADP is all about reconnaissance. The software automatically scans every column in a dataset and calculates fundamental statistics: the minimum, maximum, mean, standard deviation, and most frequent values. Crucially, it also examines structural integrity. It measures the completeness of the data (the percentage of null values in each column), checks the uniqueness of key fields, and tests whether the values satisfy logical constraints.
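As a rough illustration of this reconnaissance step, the sketch below profiles a single column using only the Python standard library. The function name `profile_column` and the convention that `None` marks a missing value are assumptions made for this example; they are not taken from any particular profiling product.

```python
from collections import Counter
from statistics import mean, stdev


def profile_column(values):
    """Return basic profile statistics for one column given as a list.

    None marks a missing value. Numeric statistics are added only when
    every non-null value is an int or float.
    """
    non_null = [v for v in values if v is not None]
    profile = {
        "count": len(values),
        "null_pct": 100.0 * (len(values) - len(non_null)) / len(values) if values else 0.0,
        "distinct": len(set(non_null)),
        "is_unique": len(set(non_null)) == len(non_null),
        "most_frequent": Counter(non_null).most_common(1)[0][0] if non_null else None,
    }
    numeric = non_null and all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null
    )
    if numeric:
        profile["min"] = min(non_null)
        profile["max"] = max(non_null)
        profile["mean"] = mean(non_null)
        # Sample standard deviation needs at least two data points.
        profile["stdev"] = stdev(non_null) if len(non_null) > 1 else 0.0
    return profile


amounts = [10, 20, 20, None, 30]
print(profile_column(amounts))
```

Running the same function over every column of a table gives the kind of summary a profiling tool produces as its first pass.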

This automated mapping gives developers and analysts a foundational understanding. For example, in a large customer dataset, ADP might quickly reveal that a supposedly unique CustomerID column has a 2% duplication rate, which is a serious data quality issue, or that the OrderDate column, which should only contain dates from the last year, has records dating back to 1980. Catching such fundamental errors early can save weeks of manual investigation, allowing the team to move quickly toward remediation and more sophisticated analysis. If you’re looking to deepen your expertise in this area, specialized data science classes in Bangalore often emphasize these initial, critical steps of data preparation.
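The two findings in this example could be checked along the following lines. This is a minimal sketch: the helper names `duplication_rate` and `out_of_window`, the sample values, and the 365-day window are all assumptions chosen for illustration.

```python
from datetime import date, timedelta


def duplication_rate(keys):
    """Percentage of rows whose key value already appeared in an earlier row."""
    if not keys:
        return 0.0
    return 100.0 * (len(keys) - len(set(keys))) / len(keys)


def out_of_window(dates, today, days=365):
    """Return the dates that fall outside the last `days` days up to `today`."""
    earliest = today - timedelta(days=days)
    return [d for d in dates if d < earliest or d > today]


# Made-up sample data standing in for the CustomerID and OrderDate columns.
customer_ids = ["C1", "C2", "C3", "C2", "C4"]
order_dates = [date(2024, 3, 1), date(1980, 5, 17), date(2024, 6, 30)]

print(duplication_rate(customer_ids))
print(out_of_window(order_dates, today=date(2024, 7, 1)))
```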

2. The Power of Inferred Metadata and Relationships

Beyond simple statistics, powerful ADP tools excel at metadata inference. They don’t just read the column headers; they analyze the values within to infer semantic meaning and relationships. A column labeled CD could mean “Compact Disc,” “Certificate of Deposit,” or “Country Dialing Code.” ADP algorithms examine patterns—like specific lengths, delimiters, and character sets—to suggest the likely domain, perhaps classifying it as a “two-letter ISO Country Code.”
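One simple way to approximate this kind of inference is to test a column's values against a catalogue of regular expressions and report the domain that most values match. The sketch below assumes a tiny, hypothetical catalogue (`DOMAIN_PATTERNS`) and a 90% match threshold. It checks only the shape of each value, so a two-letter match is a suggestion to verify against the real ISO country list, not proof.

```python
import re

# Hypothetical pattern catalogue; a real tool would ship a far larger one.
DOMAIN_PATTERNS = {
    "two-letter country code": re.compile(r"[A-Z]{2}"),
    "ISO date (YYYY-MM-DD)": re.compile(r"\d{4}-\d{2}-\d{2}"),
    "email address": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "integer": re.compile(r"[+-]?\d+"),
}


def infer_domain(values, threshold=0.9):
    """Suggest the best-matching domain, or None if no pattern reaches `threshold`."""
    sample = [str(v).strip() for v in values if v is not None and str(v).strip()]
    if not sample:
        return None
    best_name, best_share = None, 0.0
    for name, pattern in DOMAIN_PATTERNS.items():
        # Share of sampled values that match this pattern in full.
        share = sum(1 for v in sample if pattern.fullmatch(v)) / len(sample)
        if share > best_share:
            best_name, best_share = name, share
    return best_name if best_share >= threshold else None


print(infer_domain(["US", "DE", "IN", "FR"]))
print(infer_domain(["abc", "12", "US"]))
```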

Furthermore, ADP actively seeks out inter-table relationships. By automatically detecting columns with high value overlaps across different tables (e.g., a UserID in a Customer table matching a User_ID in an Orders table), it can suggest foreign-key relationships. This feature is a game-changer when integrating data from disparate, undocumented sources, allowing analysts to stitch together a unified view of the enterprise without relying on exhaustive, often outdated, data documentation.
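Value overlap can be measured as containment: the share of distinct values in a candidate child column that also appear in a candidate parent column. The sketch below is one plausible way to turn that into foreign-key suggestions, not a description of how any specific tool works. The function names, the 0.95 threshold, and the rule that a parent column must be unique are assumptions for this example.

```python
def containment(child_values, parent_values):
    """Share of distinct non-null child values that also occur in the parent column."""
    child = {v for v in child_values if v is not None}
    parent = {v for v in parent_values if v is not None}
    if not child:
        return 0.0
    return len(child & parent) / len(child)


def suggest_foreign_keys(tables, threshold=0.95):
    """Yield (child, parent, score) for column pairs across different tables.

    `tables` maps table name -> {column name -> list of values}. A parent
    column must be unique, as a primary key would be.
    """
    columns = [(t, c, vals) for t, cols in tables.items() for c, vals in cols.items()]
    for child_t, child_c, child_vals in columns:
        for parent_t, parent_c, parent_vals in columns:
            if child_t == parent_t:
                continue
            parent_non_null = [v for v in parent_vals if v is not None]
            if len(set(parent_non_null)) != len(parent_non_null):
                continue  # parent column has duplicates, so it cannot be a key
            score = containment(child_vals, parent_vals)
            if score >= threshold:
                yield (f"{child_t}.{child_c}", f"{parent_t}.{parent_c}", score)


# Made-up tables mirroring the UserID / User_ID example.
tables = {
    "Customer": {"UserID": [1, 2, 3, 4]},
    "Orders": {"User_ID": [1, 1, 3, 4, 4], "OrderNo": [100, 101, 102, 103, 104]},
}
candidates = list(suggest_foreign_keys(tables))
print(candidates)
```

Comparing every column pair is quadratic in the number of columns, so on a large warehouse a tool would likely prune candidates first, for example by data type or by sampling.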

3. Case Study: Mitigating Risk in Financial Compliance

A major international bank was facing challenges in integrating data from a recently acquired subsidiary for regulatory reporting. The deadline was tight.

The Challenge: Over 500 tables of unfamiliar transaction and account data needed to be mapped to the bank’s central data model.

ADP Solution: The bank used automated profiling to scan the subsidiary’s entire data warehouse in 48 hours. It immediately flagged several critical issues: inconsistent date formats, missing values in required regulatory fields (like CountryOfOrigin), and unexpected text data in columns designated for numeric currency amounts.
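The three kinds of issue flagged here can each be detected with a small check. The sketch below is illustrative only. The date shapes in `DATE_SHAPES`, the helper names, and the treatment of commas as thousands separators are assumptions, and nothing here reflects the bank's actual tooling.

```python
import re
from collections import Counter

# Illustrative format shapes only; they do not validate that a date is real.
DATE_SHAPES = {
    "YYYY-MM-DD": re.compile(r"\d{4}-\d{2}-\d{2}"),
    "DD/MM/YYYY or MM/DD/YYYY": re.compile(r"\d{2}/\d{2}/\d{4}"),
    "DD.MM.YYYY": re.compile(r"\d{2}\.\d{2}\.\d{4}"),
}


def is_blank(value):
    """True for None or a value that is empty after stripping whitespace."""
    return value is None or not str(value).strip()


def date_format_counts(values):
    """Count non-blank values per date shape; more than one key means mixed formats."""
    counts = Counter()
    for v in values:
        if is_blank(v):
            continue
        text = str(v).strip()
        label = next((n for n, p in DATE_SHAPES.items() if p.fullmatch(text)), "unrecognised")
        counts[label] += 1
    return counts


def missing_count(values):
    """Number of blank values in a required field."""
    return sum(1 for v in values if is_blank(v))


def non_numeric(values):
    """Return the non-blank values that cannot be parsed as a number."""
    bad = []
    for v in values:
        if is_blank(v):
            continue
        try:
            # Assumption: commas are thousands separators, as in "1,200".
            float(str(v).replace(",", ""))
        except ValueError:
            bad.append(v)
    return bad


print(date_format_counts(["2023-01-15", "15/01/2023", "2023-02-01", ""]))
print(missing_count(["US", None, " ", "DE"]))
print(non_numeric(["100.50", "1,200", "N/A", None, "12 USD"]))
```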

The Impact: By identifying these data quality anomalies before the integration began, the bank avoided filing an incorrect regulatory report, saving millions in potential fines and preventing significant reputational damage. This proactive quality check underscored the necessity of robust data governance skills, a cornerstone taught in leading data science classes in Bangalore.

4. Case Study: Accelerating E-commerce Personalization

An e-commerce giant wanted to launch a new recommendation engine but first needed to consolidate customer activity across its website, mobile app, and physical store purchase systems.

The Challenge: The three systems used different identifiers for the same customer (e.g., web_ID, app_UUID, and loyalty_card_number).

ADP Solution: Automated profiling was deployed to calculate Value Overlap and Clustering scores across these different identifier fields. The system successfully found a high correlation between the identifiers, suggesting a common underlying customer, even where the formats differed.

The Impact: This allowed the data engineers to quickly build a Master Customer Record, stitching all data points together. The recommendation engine launched on time, immediately improving click-through rates by 15% due to the more complete, unified customer view, highlighting the direct business value of clean data. This kind of practical application is central to advanced data science classes in Bangalore.

5. Case Study: Ensuring Public Health Data Integrity

A state health department received daily patient records from hundreds of clinics and hospitals during a public health crisis. The data was essential for tracking infection rates and resource allocation.

The Challenge: Manual review of incoming files for data entry errors was slow and often missed critical issues.

ADP Solution: The department implemented a real-time ADP tool that applied a set of predefined data quality rules upon ingestion. For example, it flagged any DateOfAdmission that occurred after the DateOfDischarge, or any record where Age was outside the range of 0 to 120.
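The two rules mentioned above can be expressed as a small validation function applied to each record on ingestion. This is a sketch under stated assumptions: records are plain dictionaries, missing fields are left to a separate completeness check, and the names `validate_record` and `ingest` are hypothetical.

```python
from datetime import date


def validate_record(record):
    """Return a list of rule violations for one patient record (empty if clean)."""
    errors = []
    admitted = record.get("DateOfAdmission")
    discharged = record.get("DateOfDischarge")
    # A patient still in hospital has no discharge date, so the rule is skipped then.
    if admitted is not None and discharged is not None and admitted > discharged:
        errors.append("DateOfAdmission is after DateOfDischarge")
    age = record.get("Age")
    if age is not None and not 0 <= age <= 120:
        errors.append("Age is outside the range 0 to 120")
    return errors


def ingest(records):
    """Split incoming records into accepted ones and (record, errors) rejections."""
    accepted, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(record)
    return accepted, rejected


# Made-up sample records for illustration.
incoming = [
    {"DateOfAdmission": date(2024, 1, 5), "DateOfDischarge": date(2024, 1, 9), "Age": 47},
    {"DateOfAdmission": date(2024, 1, 12), "DateOfDischarge": date(2024, 1, 10), "Age": 33},
    {"DateOfAdmission": date(2024, 1, 8), "DateOfDischarge": None, "Age": 130},
]
accepted, rejected = ingest(incoming)
print(len(accepted), len(rejected))
```

Returning the list of violated rules with each rejected record is what lets the sending clinic see exactly what to correct at the source.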

The Impact: Errors were caught and rejected almost instantly, forcing the clinics to correct them at the source. This significantly improved the accuracy and timeliness of the official public health reporting, enabling better predictive modeling and resource deployment for the crisis response team.

Conclusion: The Foundation of Data Trust

Automated Data Profiling is more than a technical utility; it is the cornerstone of data trust. By systematically and efficiently exposing the hidden metadata, inconsistencies, and quality issues within a dataset, ADP transforms an unknown quantity into a known asset. It empowers data teams to stop wasting time manually debugging and start focusing on advanced analytics and insight generation. In an era where data volume and velocity only increase, mastering ADP is no longer optional—it is a mandatory discipline for any organization aiming to build a reliable, high-performance, and trustworthy data pipeline.